In a previous blog post, we described our human-centered approach to AI and reflected upon our learnings from the 2-year AIGA research project around responsible AI. In this post, we will present the method we have developed for supporting the design and development of trustworthy AI solutions, particularly considering the end user perspective.

A lack of trust around AI

People and organizations sometimes struggle to completely trust AI systems, despite their ubiquitous presence in our work and everyday life. People address their mundane ponderings with global search engines via mobile phones, while their life experiences are constantly influenced by personally targeted ads. Even electronics found within the home now optimize themselves with automated algorithms.

One reason for this distrust may be that AI systems are perceived as black boxes. They provide decisions, predictions, or recommendations, yet fail to explain how they arrived at them. Think about the online loan application required by your bank. It may tell you that you can’t get a loan to buy a house, without explaining why or allowing you to change the parameters to get the desired decision.

In some cases, these systems treat people unfairly due to their inherent biases, which reflect past societal structures, social practices, or attitudes. For example, a female spouse is often awarded a lower credit limit by banks, despite sharing assets with her husband or having a better credit score than her male partner.

In addition, AI applications may not give people awareness of, or control over, the underlying automation, leading to suboptimal or, in the worst case, unfortunate situations. For these reasons, people are often left powerless and may even feel betrayed.

To earn Trust, AI should be Trustworthy

Trust and trustworthiness have been a core theme in the ethical AI frameworks of many public organizations and private companies, such as Deloitte, KPMG, the EU, and the OECD. All of them are based on the premise that trustworthy systems must behave according to their stated principles, requirements, or functionalities.

Many of these existing frameworks apply top-down predefined sets of ethical principles and processes when designing AI systems or evaluating their trustworthiness. Their strength is in making sure that all previously recognized requirements get addressed systematically, regardless of individual evaluators and situations. Among them, the most prominent non-commercial methods are the Z-Inspection method and the EU checklist.

In our experience, predefined top-down and compliance-oriented approaches tend to be laborious and otherwise challenging, especially for small and medium-sized businesses. Also, predefined assessments can miss alternative views outside their planned scope, as well as specific situational details of the user's interaction with AI.

The above issues are particularly relevant challenges, as trust and trustworthiness are a multi-perspective dilemma, considering individual and contextual factors, contradictory valuations, and complex prerequisites. People and organizations recognize, reason, and prioritize individual factors differently, and they may even change their priorities in diverse situations.

Therefore, in contrast to existing frameworks, we decided to develop a bottom-up approach that explores a specific use case and the individual users of an AI system. The aim is to ascertain how people view certain contextual or individual factors during decision-making situations. For this reason, we did not want to predefine what type of requirements or metrics should be assessed, but instead chose to explore topics and concerns as widely as possible.

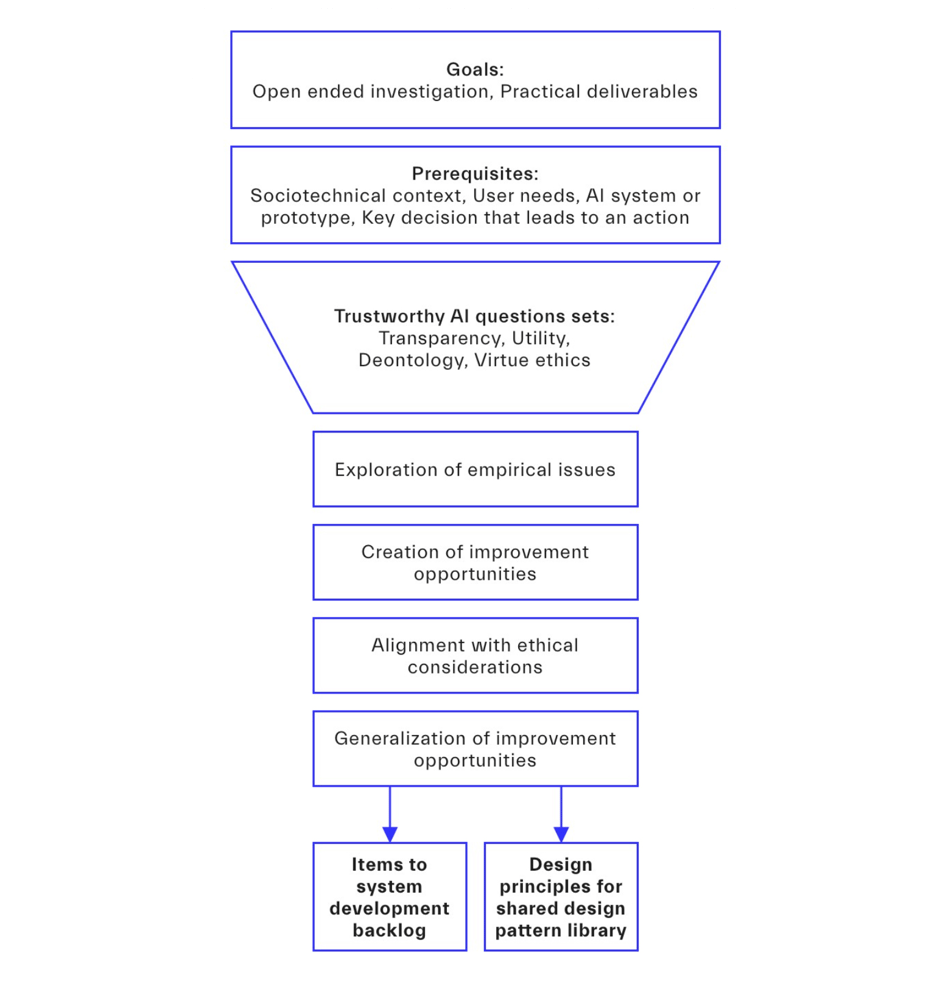

We also wanted to develop a practical and repeatable method that could be applied in real-life business settings. The approach should help to identify pragmatic improvement opportunities that could be more easily included in a system development backlog, then implemented in organizational reality, and generalized within a shared design pattern library. The core idea was not to evaluate conformance to predefined objectives, but to derive new objectives that could be implemented to help AI users in their use case and subsequently generalize learnings for other use cases (Figure 1).

Figure 1 – Method process diagram.

Making AI more Trustworthy in practice

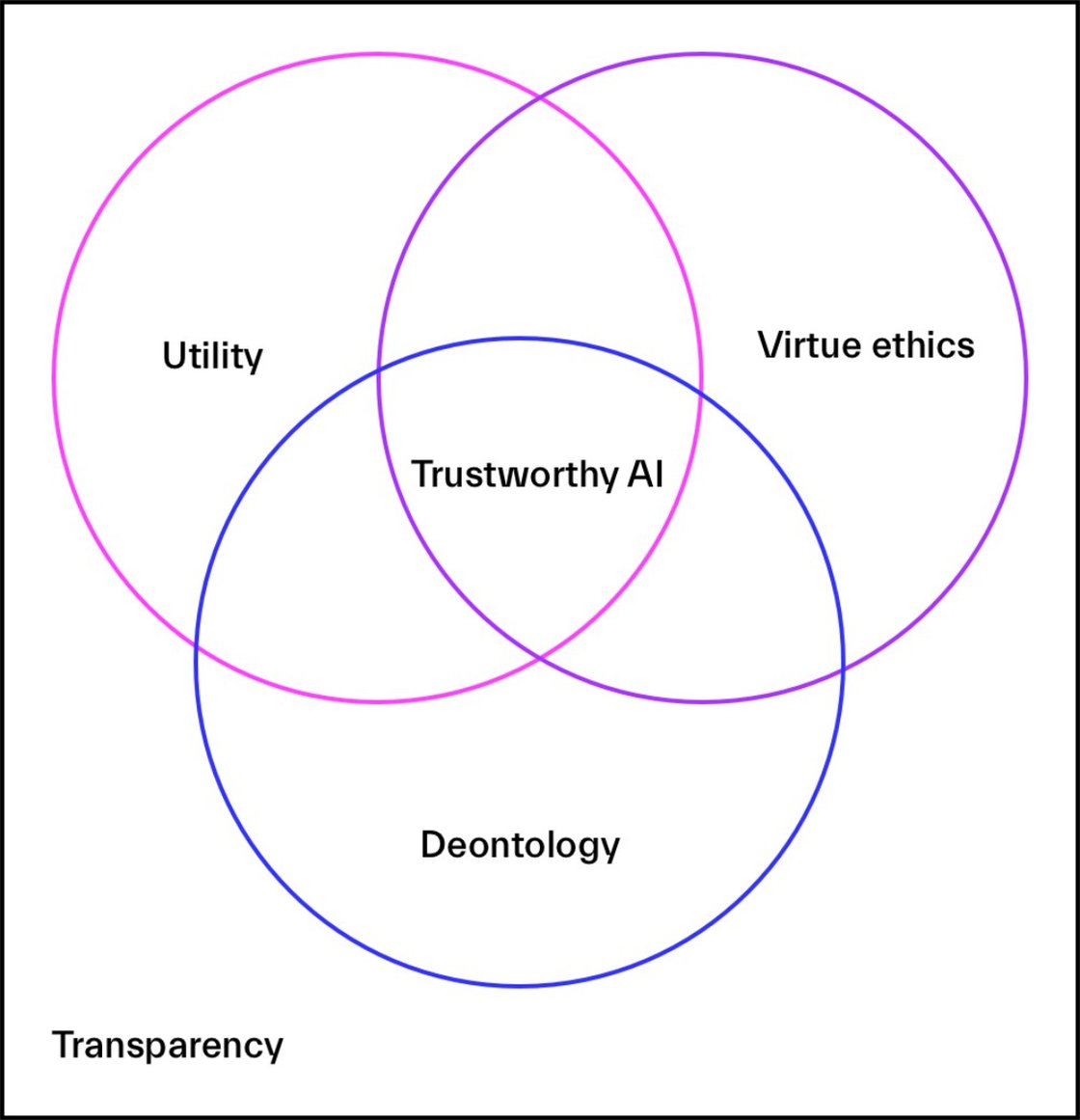

The method we developed consists of four sets of questions, each one exploring the AI use case from its own perspective (Figure 2).

Figure 2 – Trustworthiness framework.

The first perspective focuses on assessing users’ perception of a system’s transparency. In our view, AI systems should be explicit about their inner operations and the decisions or recommendations they give people. AI systems should also provide all the necessary information required to allow people to fully understand the reasons behind such results. Only then can people make the appropriate use of them.

The second, third, and fourth aspects focus on three alternative perspectives of normative ethics, which people might consider before they trust something. The questions assess people’s perception of an AI system’s consequences (utility), compliance with rights and responsibilities (deontology), and alignment with values (virtue ethics). In our view, AI should benefit people, be compliant with relevant rules and regulations, and follow ethical principles.

In total, our method comprises 26 open-ended questions that aim to explore decision-making situations from alternative perspectives that we think are important for achieving trustworthy AI. Its usage requires a real situation in which users receive a decision, recommendation, or prediction from an existing AI service, system, or prototype and need to take an action after receiving the result. To obtain a wider perspective, the interviewers should also consider the main stakeholders involved in the business process supported by the AI.

After the interviews, empirical findings must be analyzed holistically to recognize issues that concerned users and other stakeholders. The understanding gained from the interviews helps to derive improvement opportunities that will be formulated as backlog items for systems development or organizational practice. This can be obtained by means of traditional design thinking techniques, rather than following a straightforward technical process.

To help make improvement opportunities a reality, AI services should be viewed as a worthy investment. To support this goal, we feel that each opportunity for improvement should be grounded in an organization’s internal needs and cultural values, and should outline how it contributes to the organization’s business operations. The more significant reasons from various perspectives that can be linked to a backlog item, the more likely it is to be included in active epics and sprints.

Improvement opportunities can also be synthesized into more generic design principles. In this way, the empirical learning and design insights can be applied to other organizational AI systems and become part of a knowledge base for wider benefit.

Example

To test our method, we applied it to evaluate the perceived trustworthiness of the AI recommendation system we use in our organization to support consultants’ allocation management tasks. The tool, called Siili Seeker, allows sales managers and consultants’ supervisors to enter a text description of a customer’s need for a task, project, and/or service. It then extracts relevant keywords and compares them to the experience and skills that consultants previously entered in their profiles through the competence management system in use at Siili. As a result, Seeker returns a ranked list of consultants, including a score and a list of matching skills as keywords. When sales managers or supervisors find a suitable candidate, they can reserve or allocate them to a specific customer project.
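To make the flow described above more concrete, here is a minimal Python sketch of keyword-based matching. It is not Seeker's actual implementation, which is not described in detail here. The names `Match`, `extract_keywords`, and `rank_consultants`, the sample profiles, and the scoring rule (the share of extracted keywords found in a profile) are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Match:
    consultant: str
    score: float                 # assumed rule: share of keywords found in the profile
    matching_skills: list[str]   # the keywords that matched, sorted alphabetically


def extract_keywords(description: str, vocabulary: set[str]) -> set[str]:
    """Keep only the words of the description that are known skills."""
    words = {w.strip(".,;:!?()").lower() for w in description.split()}
    return words & vocabulary


def rank_consultants(description: str, profiles: dict[str, set[str]]) -> list[Match]:
    """Return consultants with at least one matching skill, best score first."""
    # Hypothetical vocabulary: every skill that appears in any profile.
    vocabulary = {skill for skills in profiles.values() for skill in skills}
    keywords = extract_keywords(description, vocabulary)
    matches = []
    for name, skills in profiles.items():
        shared = sorted(keywords & skills)
        if shared:  # an empty keyword set never reaches the division below
            matches.append(Match(name, len(shared) / len(keywords), shared))
    return sorted(matches, key=lambda m: m.score, reverse=True)


# Invented sample data, with skills stored in lowercase.
profiles = {
    "Aino": {"python", "aws", "react"},
    "Mikko": {"java", "aws"},
    "Sara": {"figma"},
}
for match in rank_consultants("Customer needs a Python backend on AWS.", profiles):
    print(match.consultant, match.score, match.matching_skills)
```

Returning the matching skills alongside the score mirrors the output described above, and gives users something to inspect when they want to understand why a consultant was ranked where they were.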

By interviewing sales managers, consultants’ supervisors, and consultants themselves, who do not use the tool but are a central part of the allocation process, we could identify a wide range of practical issues related to our AI solution. We then turned them into improvement opportunities, which we further generalized into design principles.

As an example, considering the utility perspective, our interviews highlighted that Seeker does not recommend changing consultants’ project allocation to more suitable projects. Thus, an improvement opportunity could be that Seeker should recommend a project change when sufficient conditions suggest a better fit.

Implementing this improvement opportunity could provide many benefits. Consultants’ vocational satisfaction could increase due to better opportunities to follow their working passions, and customers could get better outcomes from the projects due to more optimal consultants on their projects. Finally, the consulting company could reduce the risk of consultants changing employer for a more interesting project.
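One way to keep these reasons attached to the improvement opportunity is to record them on the backlog item itself, grouped by the perspective they came from. The following is a minimal sketch, assuming a hypothetical `BacklogItem` structure. The field names and the `reason_count` helper are illustrative and not part of the published method.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The four perspectives of the method.
PERSPECTIVES = ("transparency", "utility", "deontology", "virtue ethics")


@dataclass
class BacklogItem:
    title: str
    # Maps a perspective name to the reasons gathered for it in the interviews.
    reasons: dict[str, list[str]] = field(default_factory=dict)

    def add_reason(self, perspective: str, reason: str) -> None:
        if perspective not in PERSPECTIVES:
            raise ValueError(f"unknown perspective: {perspective}")
        self.reasons.setdefault(perspective, []).append(reason)

    def reason_count(self) -> int:
        """Total number of reasons linked to this item."""
        return sum(len(items) for items in self.reasons.values())


item = BacklogItem("Recommend a project change when conditions suggest a better fit")
item.add_reason("utility", "Consultants can better follow their working passions")
item.add_reason("utility", "Customers get better outcomes from their projects")
item.add_reason("utility", "Lower risk of consultants changing employer")
print(item.reason_count(), "reasons across", len(item.reasons), "perspective(s)")
```

Counting reasons is only a rough proxy for significance. In practice the weight of each reason would still be judged by the people prioritizing the backlog.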

Conclusion

As the above example outlines, our method can be used to assess the trustworthiness of AI prototypes or systems from the perspective of their users. In contrast with predefined frameworks, our bottom-up approach can help to gain a wider and more open understanding of users’ situational and ethical considerations.

However, it is equally important to use creative design thinking to turn ethical considerations into practical improvement opportunities that can be added as items to a system development backlog. Improvement opportunities should not exist as well-meaning yet vague statements in administrative documents.

Needless to say, backlog items that are considered more important are more likely to be included in future development sprints. For this purpose, our open-ended exploration can deliver a vivid set of ethical considerations relevant to different stakeholders. These empirical findings can therefore be used to argue for the significance of improvement items from various complementary angles.

It is worth pointing out that our practical method can be applied at various stages of AI development. With this in mind, we would relish the opportunity to partner with you to see how it can support further iterations of your AI prototype, assess and enhance the trustworthiness of your existing AI solution, or compare results with previous assessments carried out with top-down frameworks.

If you are keen to read more about our method and its application, here is the link to the paper which discusses the topic in greater detail (from the International Journal of Human-Computer Interaction, published with open access).
