Across industries, organizations are discovering that the skills needed to build a compelling AI proof of concept are fundamentally different from those required to operate AI reliably at scale. The data scientist who built your model probably isn't the right person to monitor it at night when inference latency spikes. And the infrastructure team that keeps your ERP running has never dealt with model drift, vector database scaling, or token cost explosions.

This gap between “it works in the PoC” and “it works in our business” is where most AI initiatives stall. It's also where a new service category is emerging: Managed AI.

This article explains why Managed AI is becoming essential. It explores the operational realities of production AI, defines what Managed AI includes, examines how the service works in practice, and weighs the build-versus-partner decision many organizations face.

The Production Problem Nobody Warned You About

Building a proof of concept is genuinely exciting. You pull together a small team, experiment with models, connect some data, and within weeks you have something that feels almost magical. Leadership gets excited. Budget gets approved. Then reality sets in.

Production AI introduces operational complexity that catches most organizations off guard. Models that performed brilliantly in testing start delivering inconsistent results after a few months. The cloud infrastructure that seemed straightforward during development becomes a tangle of scaling policies, cost allocations, and security configurations. Data pipelines that worked fine with sample datasets struggle to maintain quality and freshness at production volumes.

And then there's the inevitable incident. Something breaks on a Friday evening. The model starts hallucinating. A misconfigured pipeline corrupts your vector store. Costs spike because autoscaling triggered unexpectedly. Suddenly, your AI initiative isn't a success story anymore – it's a fire drill.

The uncomfortable truth is that most organizations aren't equipped to handle these challenges. Not because they lack talent or ambition, but because running AI in production requires a combination of specialized skills, tooling, and operational discipline that takes years to develop in-house.

Defining Managed AI

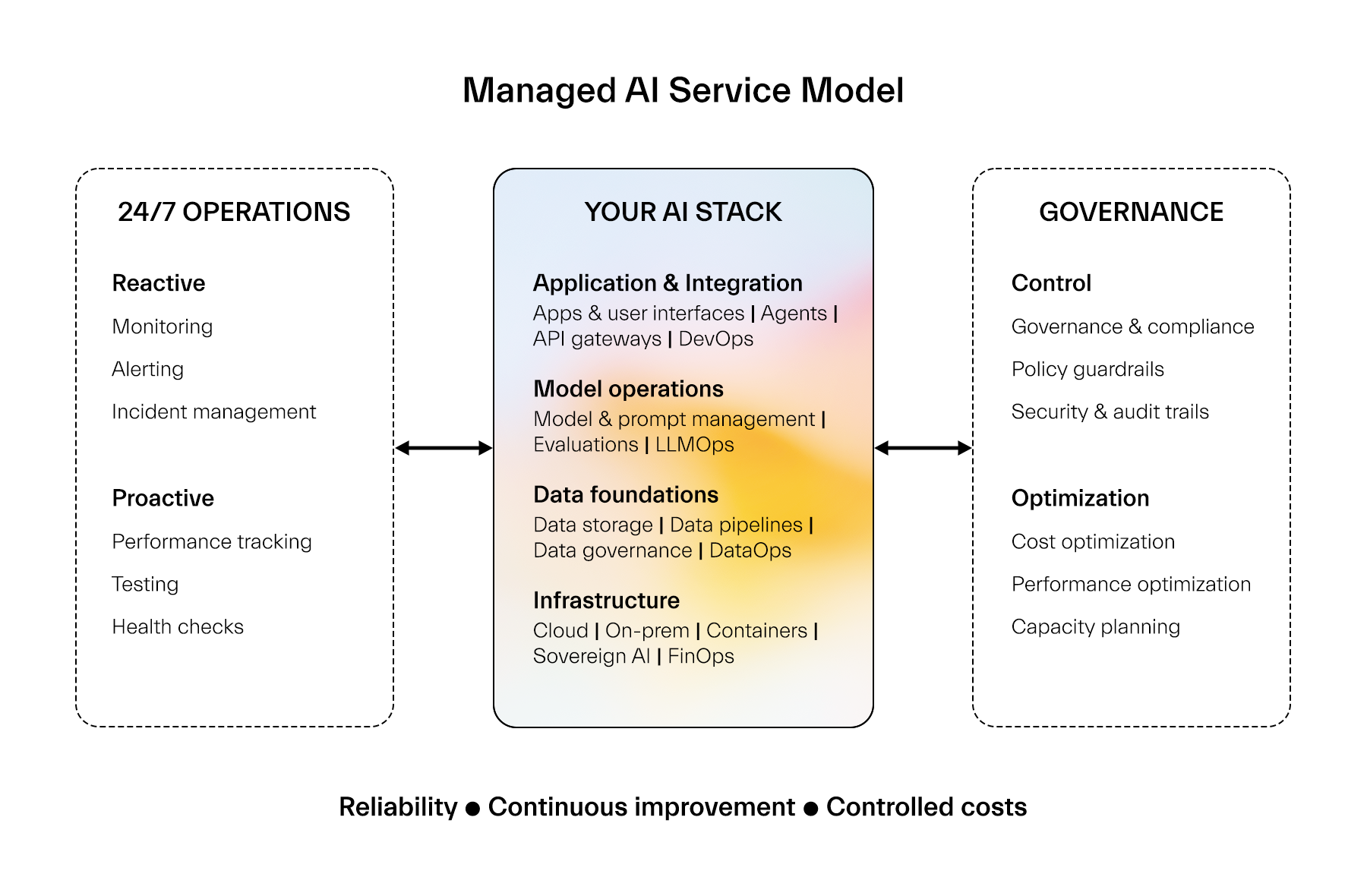

So what exactly is Managed AI? At its core, it's a turnkey service to run and improve AI solutions in production, spanning the full stack from cloud infrastructure through data pipelines to model serving and application integration.

A Managed AI provider takes responsibility for ensuring your AI models and solutions are deployed safely, operate reliably, remain secure and compliant, and improve continuously over time. This includes managing the underlying cloud platforms, maintaining the data foundations that feed your models, and optimizing the inference infrastructure that serves your users.

Before your solution goes live, the provider helps you check all the readiness boxes, from infrastructure architecture to data governance to model evaluations. Once live, they handle daily operations under agreed service levels: managing compute resources, keeping data fresh and properly governed, monitoring quality and costs, and responding to incidents.

This isn't about outsourcing your AI strategy. You still own the vision, the use cases, the business outcomes you're driving toward. Managed AI provides the operational foundation that allows your AI investments to deliver reliable value.

The operational realities

Let's get specific about what production AI actually demands.

Key realities include:

- Model performance degrades over time. The data your model was trained on reflects the world at a specific moment. Without continuous monitoring and periodic retraining, you're making decisions based on increasingly stale intelligence.

- Infrastructure complexity compounds quickly. Production AI systems typically involve orchestrated containers, GPU clusters, vector databases, caching layers, and API gateways, each requiring configuration, monitoring, and optimization.

- Data foundations require constant attention. Source systems change. Formats evolve. Quality degrades. Embeddings need refreshing as content updates.

- Costs become unpredictable. Compute, storage, API calls, and token usage all scale with adoption, but not always linearly.

- Security and compliance demand maturity. GDPR, industry-specific regulations, and internal governance policies all apply to AI systems.

- AI incidents behave differently. A database going down is obvious; a model producing subtly wrong outputs is harder to detect and potentially more damaging.
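To make the point about non-linear costs concrete, here is a minimal sketch of one common cause: in a multi-turn chat, the full conversation history is typically resent as input on every turn. The function names, the fixed message size, and the price are illustrative assumptions, not figures from any specific provider.

```python
def conversation_input_tokens(turns: int, tokens_per_message: int) -> int:
    """Total input tokens billed when every turn resends the full history.

    Assumes (for illustration) that each user message and each assistant
    reply has the same size, `tokens_per_message`.
    """
    total = 0
    history = 0
    for _ in range(turns):
        history += tokens_per_message  # new user message joins the history
        total += history               # the whole history is sent as input
        history += tokens_per_message  # assistant reply joins the history
    return total


def input_cost_usd(tokens: int, usd_per_million_tokens: float) -> float:
    """Convert a token count to cost; the price is a hypothetical parameter."""
    return tokens / 1_000_000 * usd_per_million_tokens


if __name__ == "__main__":
    for turns in (5, 10, 20):
        tokens = conversation_input_tokens(turns, tokens_per_message=100)
        print(turns, tokens, input_cost_usd(tokens, usd_per_million_tokens=3.0))
```

Under these assumptions, doubling the number of turns roughly quadruples the input tokens, which is why usage that looks cheap in a pilot can grow faster than user counts alone would suggest.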

What the service actually looks like

Managed AI isn't a black box where you hand over control and hope for the best. It's a structured approach to operational excellence across the entire AI stack.

Before go-live, the focus is on production readiness:

- infrastructure architecture review

- data pipeline validation

- security assessments

- model performance evaluation

- integration testing

Once live, daily operations span multiple layers from AI infrastructure to applications.

Infrastructure operations

Infrastructure monitoring ensures compute resources perform reliably and scale appropriately. Cloud settings are tuned as usage patterns emerge. Multi-cloud or sovereign AI deployments introduce additional complexity that must be managed continuously.

Data operations

Data operations maintain pipeline health, content freshness, and governance compliance. As content updates, embeddings require regeneration; as source systems evolve, pipelines must adapt.
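One simple way to decide which embeddings need regeneration is to compare a hash of each document's current text with the hash stored when it was last indexed. The sketch below assumes documents are available as an ID-to-text mapping and that the index keeps a hash per ID; `find_stale` and both mappings are hypothetical names, and the actual embedding and vector store calls are left out.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_stale(
    documents: dict[str, str], indexed_hashes: dict[str, str]
) -> tuple[list[str], list[str]]:
    """Return (IDs to re-embed, IDs to delete from the index).

    A document is re-embedded when it is new or its text has changed;
    an index entry is deleted when its source document no longer exists.
    """
    to_embed = sorted(
        doc_id
        for doc_id, text in documents.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    )
    to_delete = sorted(doc_id for doc_id in indexed_hashes if doc_id not in documents)
    return to_embed, to_delete


if __name__ == "__main__":
    docs = {"a": "unchanged text", "b": "updated text", "d": "brand new"}
    index = {
        "a": content_hash("unchanged text"),
        "b": content_hash("old text"),
        "c": content_hash("removed document"),
    }
    print(find_stale(docs, index))
```

Re-embedding only what changed keeps the refresh cheap enough to run on a schedule rather than as an occasional full rebuild.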

Model operations

Model operations track performance, detect drift, and trigger retraining when needed. When something goes wrong at any layer, defined procedures and clear escalation paths ensure rapid resolution.
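As an illustration of what "detect drift" can mean in practice, the sketch below computes the Population Stability Index (PSI), one widely used measure of how far a live feature or score distribution has moved from a baseline. This is one possible technique, not a description of any particular provider's tooling; the bin count and any alert threshold are assumptions to tune per use case.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a live sample.

    Bins are equal-width over the baseline's range; live values outside
    that range are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def fractions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        eps = 1e-6  # floor so empty bins do not produce log(0)
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    shifted = [0.5 + i / 200 for i in range(100)]
    print(psi(baseline, baseline), psi(baseline, shifted))
```

A commonly cited rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating. A check like this runs on inputs or model scores, so it can raise a flag before labeled outcomes are available to measure accuracy directly.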

Continuous improvement happens across the stack: infrastructure tuning, data pipeline refinement, prompt adjustments, and cost optimization.

The build-versus-partner calculation

Every organization eventually asks: should we build these capabilities ourselves or work with a managed service provider?

Building a mature AI operations capability internally takes 18 to 24 months under optimal conditions. It requires hiring specialists across infrastructure, data engineering, and ML operations in a competitive market. It means developing tooling and processes from scratch and sustaining investment.

The managed approach offers flexibility that in-house teams struggle to replicate. Need full-stack operations covering infrastructure through model serving? That's an option. Require sovereign AI infrastructure? That can be covered as well. Prefer a co-managed model where your team handles cloud infrastructure while the provider focuses on AI-specific operations? That works too.

When evaluating providers, certifications like ISO/IEC 42001 signal that a provider has invested in governance and responsible AI management systems.

Taking the next step

If you're navigating the transition from AI pilot to production, start by honestly assessing your operational readiness across the full stack. Do you have the skills to manage AI infrastructure? Do you understand total cost of ownership beyond initial development? Are your data foundations solid enough to support production workloads? Can you monitor and improve models continuously?

The organizations that thrive with AI won't be those with the most impressive demos. They'll be the ones who figured out how to make AI work reliably, every day, across every layer of the stack.

If you'd like to explore what production readiness looks like for your specific situation, we're happy to have that conversation. Let’s talk!

About the author

Toni Petäjämaa

Toni Petäjämaa is a seasoned expert in digital innovation, business development, and strategic leadership. At Siili, he helps clients turn long-term partnerships into practical growth by combining modern technologies with a strong focus on service design and continuous improvement. With a hands-on approach and a talent for simplifying complexity, Toni leads cross-functional teams, supports sustainable business outcomes, and keeps learning at the forefront, especially in areas like AI, business design, and cloud solutions.